QUOTED HERE AT SIMANAITISSAYS, A.I. machines “are hard-wired for bullshit. They aren’t designed to be accurate—they are designed to sound accurate.” And among human endeavors, mathematics is one that values accuracy. “If A, then B” means no less than this: whenever A holds, B holds too. Not correlation, not “sorta.” See, for example, “Four Things Logicians Don’t Want You to Know.”

Thus the headline of Steve Lohr’s article in The New York Times, July 23, 2024: “A.I. Can Write Poetry, But It Struggles With Math.”

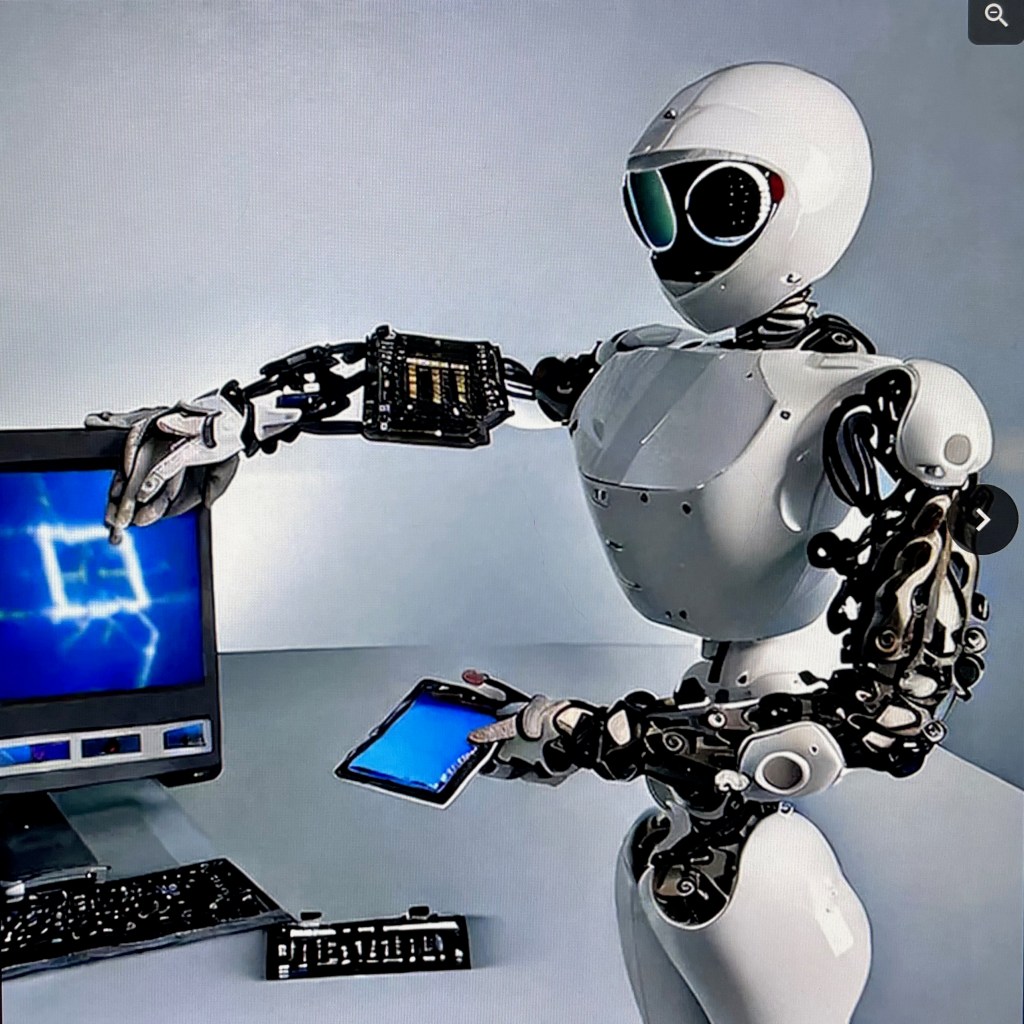

Image by Sean Dong from The New York Times.

Lohr writes, “In the school year that ended recently, one class of learners stood out as a seeming puzzle. They are hard-working, improving and remarkably articulate. But curiously, these learners—artificially intelligent chatbots—often struggle with math…. The world’s smartest computer scientists, it seems, have created artificial intelligence that is more liberal arts major than numbers whiz.”

How Come? An A.I. Large Language Model, an LLM for short, is built on an artificial neural network, loosely patterned after the human brain’s. Unlike traditional computer logic, Lohr recounts, “A.I. is not programmed with rigid rules, but learns by analyzing vast amounts of data. It generates language, based on all the information it has absorbed, by predicting what word or phrase is most likely to come next — much as humans do.”
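To make “predicting what word is most likely to come next” concrete, here is a deliberately tiny sketch that assumes nothing more than word-pair (bigram) counts over a made-up training text. Real LLMs use neural networks over far longer contexts, so treat this as an illustration of the principle, not of how ChatGPT works. The function names are hypothetical. Notice what happens with arithmetic: the toy model answers by frequency, not by adding.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional

def train_bigrams(text: str) -> Dict[str, Counter]:
    """For each word in the training text, count which words follow it."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the follower of `word` seen most often in training, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# A tiny made-up training text: "is four" appears twice, "is five" once.
corpus = "two plus two is four . two plus three is five . two plus two is four"
model = train_bigrams(corpus)

print(predict_next(model, "plus"))  # two
# The model sees only the word "is", not the sum before it, so it answers
# "four" even for "two plus three is": fluent, frequent, and wrong.
print(predict_next(model, "is"))    # four
```

The answer sounds plausible because it is the most common continuation, which is exactly the gap between sounding accurate and being accurate.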

A.I. makes these predictions, however, without a human’s awareness of self and of the world around it. And, as Lohr says, “At times, A.I. chatbots have stumbled with simple arithmetic and math word problems that require multiple steps to reach a solution, something recently documented by some technology reviewers. The A.I.’s proficiency is getting better, but it remains a shortcoming.”

Grades Are Improving, But…. Lohr writes, “Math is an ‘important ongoing area of research,’ OpenAI said in a statement, and a field where its scientists have made steady progress. Its new version of GPT achieved nearly 64 percent accuracy on a public database of thousands of problems requiring visual perception and mathematical reasoning, the company said. That is up from 58 percent for the previous version.”

Geez, I used to assess math proficiency, and a 64 was a middle D, not a huge jump from a failing 58.

Lohr recounts, “The A.I. chatbots often excel when they have consumed vast quantities of relevant training data—textbooks, drills and standardized tests. The effect is that the chatbots have seen and analyzed very similar, if not the same, questions before. A recent version of the technology that underlies ChatGPT scored in the 89th percentile in the math SAT test for high school students, the company said.”

This is akin to saying, “He got to look at the tests over and over again—and got a B+.” So much for the LLM concept….

Let’s Add Some Rule-Based Software. One approach is to assist the LLM with computational subroutines: It pauses its text generation, tells the user it is “doing math,” hands the calculation to conventional software, then returns with a more sharply defined response.
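Here is a minimal sketch of that hand-off, under loudly stated assumptions: a hypothetical router tries to parse the request as plain arithmetic and, if it can, computes the answer exactly with ordinary rule-based code; anything else goes to the language model. The names `evaluate`, `answer`, and `toy_model` are made up for illustration, `toy_model` stands in for a real LLM, and none of this describes how OpenAI or anyone else actually builds such a system.

```python
import ast
import operator

# Hypothetical router: exact arithmetic goes to rule-based code, not to the LLM.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def evaluate(expr: str):
    """Exactly evaluate +, -, *, / on numbers by walking Python's parse tree."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("not plain arithmetic")
    return walk(ast.parse(expr, mode="eval"))

def answer(question: str, language_model) -> str:
    """Try the calculator first; fall back to the (stand-in) language model."""
    try:
        return f"doing math... {evaluate(question)}"
    except (SyntaxError, ValueError):
        return language_model(question)

def toy_model(prompt: str) -> str:
    """Stand-in for an LLM's free-form text generation."""
    return "Roses are red..."

print(answer("37 * 43", toy_model))       # doing math... 1591
print(answer("Write a poem", toy_model))  # Roses are red...
```

Parsing with `ast` and walking only the allowed node types, rather than calling `eval`, keeps the calculator from running arbitrary code: the rules decide what counts as math, and the answer is computed, not predicted.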

However, as Lohr notes, “… there are skeptics who question whether adding more data and computing firepower to the large language models is enough. Prominent among them is Yann LeCun, the chief A.I. scientist at Meta. The large language models, Dr. LeCun has said, have little grasp of logic and lack common sense reasoning. What’s needed, he insists, is a broader approach, which he calls ‘world modeling,’ or systems that can learn how the world works much as humans do. And it may take a decade or so to achieve.”

And might world modeling include a sense of morality as well? ds

© Dennis Simanaitis, SimanaitisSays.com, 2024